Teaching the rubber duck to talk back

13 August 2024

How to use LLMs to improve your writing

Faced with a long text strewn as a horrible mess of convoluted syntax, long and rare words, trying to communicate complex ideas that would deserve a clear exposition, it’s tempting to yell at the author. Especially if the author is in the same room as yourself. But what if the “author” IS yourself?

In such a difficult situation, seeking reviews from others is ideal. Traditional reviews involve back-and-forth exchanges, and demand a fairly high effort from the victims to become really useful. A simpler alternative is reading aloud to a rubber duck, hoping for a fresh perspective to reveal errors. This method offers significant benefits such as avoiding human interaction and costs, but is less effective.

What if the rubber duck could talk back?

Could using LLMs (large language models, like ChatGPT) that provide insightful feedback save writers’ time and lives?

We’ll address the issues with them, the solution I wrote, and some early observations regarding their use.

Issues

Keeping personality

OpenAI’s ChatGPT is particularly known for its bloviated style, from which it cannot deviate. For example, the term “delve” now often indicates AI-generated drivel (which is a shame as I liked it!). There is evidence that this issue is caused by the censorship imposed on the models. Tests with llama and gemma2 did not suffer from this particular stylistic issue, but they do remove a lot of the flavor from the original. In other words, abusing it will give off an AI robotic vibe that detracts from quality.

Misinterpretations and general nonsense

Early tests reveal that despite improved syntax, comprehension can be lost. Sometimes because the meaning depended on a sub-meaning of a word that was switched for a mostly equivalent one. Other times because the meaning was dependent of the sentence structure, as it impacts the tone.

While comparisons between ‘intelligence’ and LLM abilities are fraught with issues and discrepancies, this raises warning signs. If an LLM struggles to understand a sentence, it indicates potential readability issues for humans as well. Even if the output is not usable in these cases, it highlights areas that may require more attention.

Getting the interface right

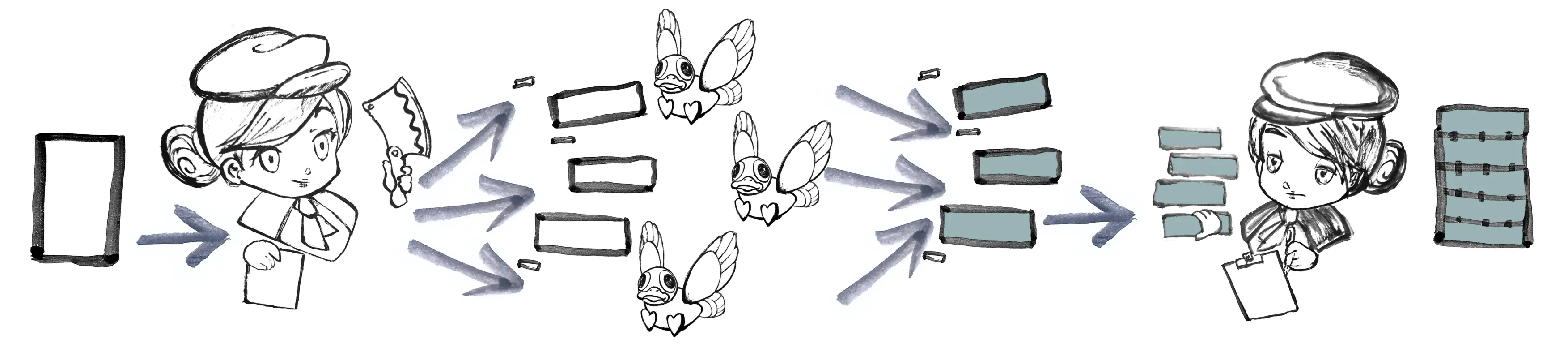

An external review aims to identify issues that might be hidden in our blind spots. Therefore, the idea is to run the LLM on the whole text. Instead of just reviewing suspect sections, we ask the LLM to read everything and evaluate each paragraph’s clarity. This allows us to prioritize the most unclear sections for revision and potentially receive suggestions for improvement. Clearer sections can then be quickly processed. This more parsimonious approach should in principle solve the personality problem.

However, calls to an LLM are costly, in either time, money, or both. Furthermore, its results are non-deterministic, and might fail to provide an accurate answer. Those issues mean that intermediary results of chunk processing should be stored, so that processing can take up where it left in case of crash. How did I come to this deduction? I wrote a naive script, and it crashed after 24 minutes. After a quick look, I opted for Luigi as a workflow management tool.

The base case with Luigi is to use local text files to store intermediary results, so a job has run if and only if the corresponding files exist on disk. The process involves splitting the text into chunks, each processed by a separate job. Finally, an aggregation job combines all results.

I have settled on two workflows:

- Sorting all rephrased chunks into a single file, prioritizing less clear ones.

- Committing individual chunk changes using git diff tools like delta, leveraging the familiar review workflow.

I thought this would be a simple python script, but there were more complexities than expected.

The repository: https://github.com/Le09/olluigi

There are existing solutions for “real” workflows, such as Flowise and RivetAI, which I didn’t investigate yet.

Tool roundup:

Conclusions?

It is still difficult to properly assess how useful this can be. Its lack of immediate obvious impact suggests it may not be a revolutionary game-changer. In a few months I will re-assess whether this warrants a simple update to this post or an entirely new follow-up.