Bullshit as gameplay: a revolution in video-games

21 September 2024

A new kind of AI-augmented visual novel where you have to bullshit your way to success

The game will soon be available for free on Steam (where you can wishlist it), itch.io, and GitHub. A playthrough should only last around 10 minutes.

There are games for learning many subjects, like Japanese or programming, but few that teach the value of cheating and lying. Why is such an essential component of daily life so underserved? One could argue that it was a limitation of technology: not a lack of desire, but a feasibility issue. Thanks to AI, nothing is out of reach anymore. From the applicant’s perspective, AI will help you come up with the perfect cover letter. From the recruiter’s perspective, it will rate applicants for you! In this experimental game, you will see both sides, so let’s dive in!

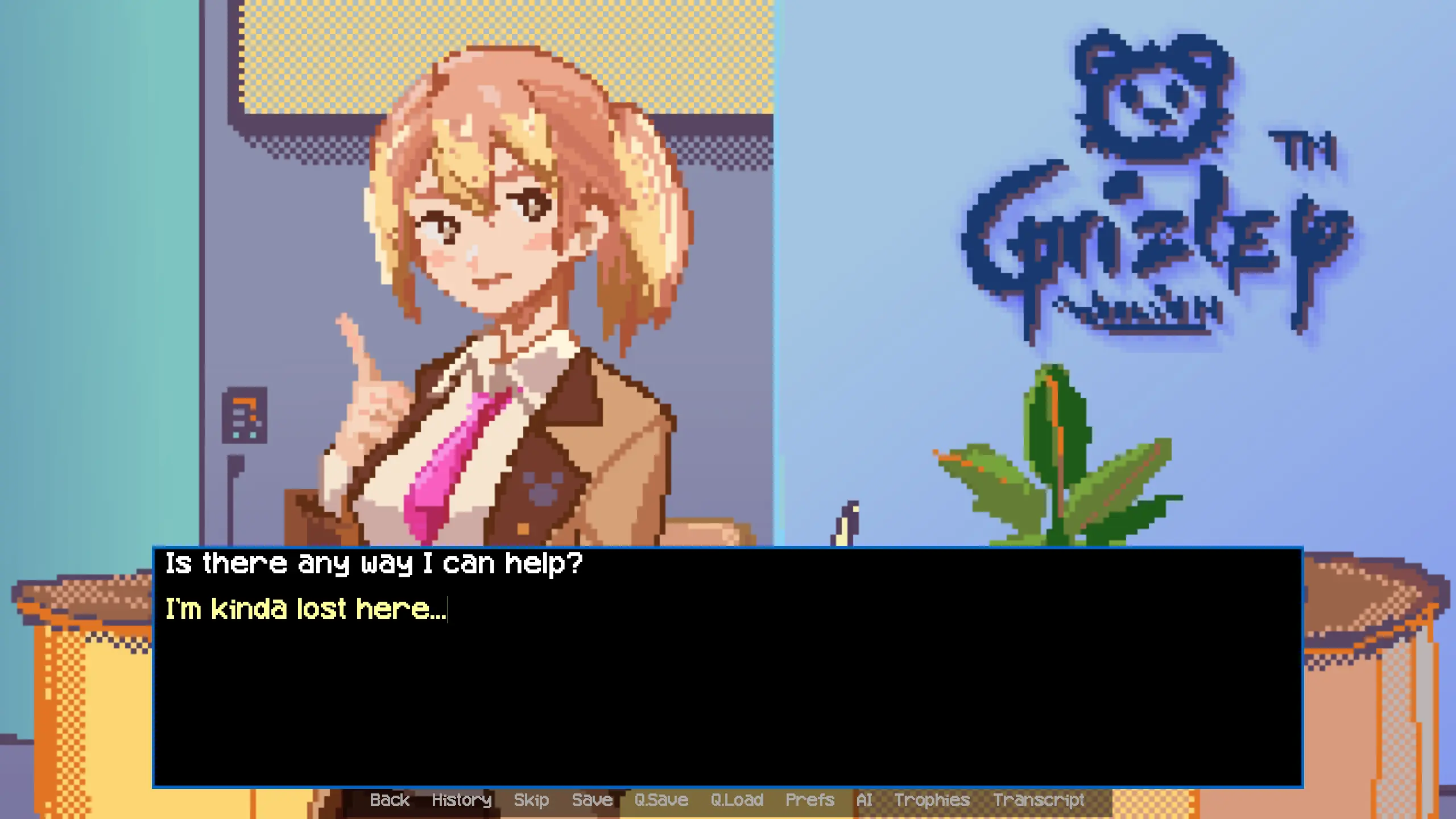

The game

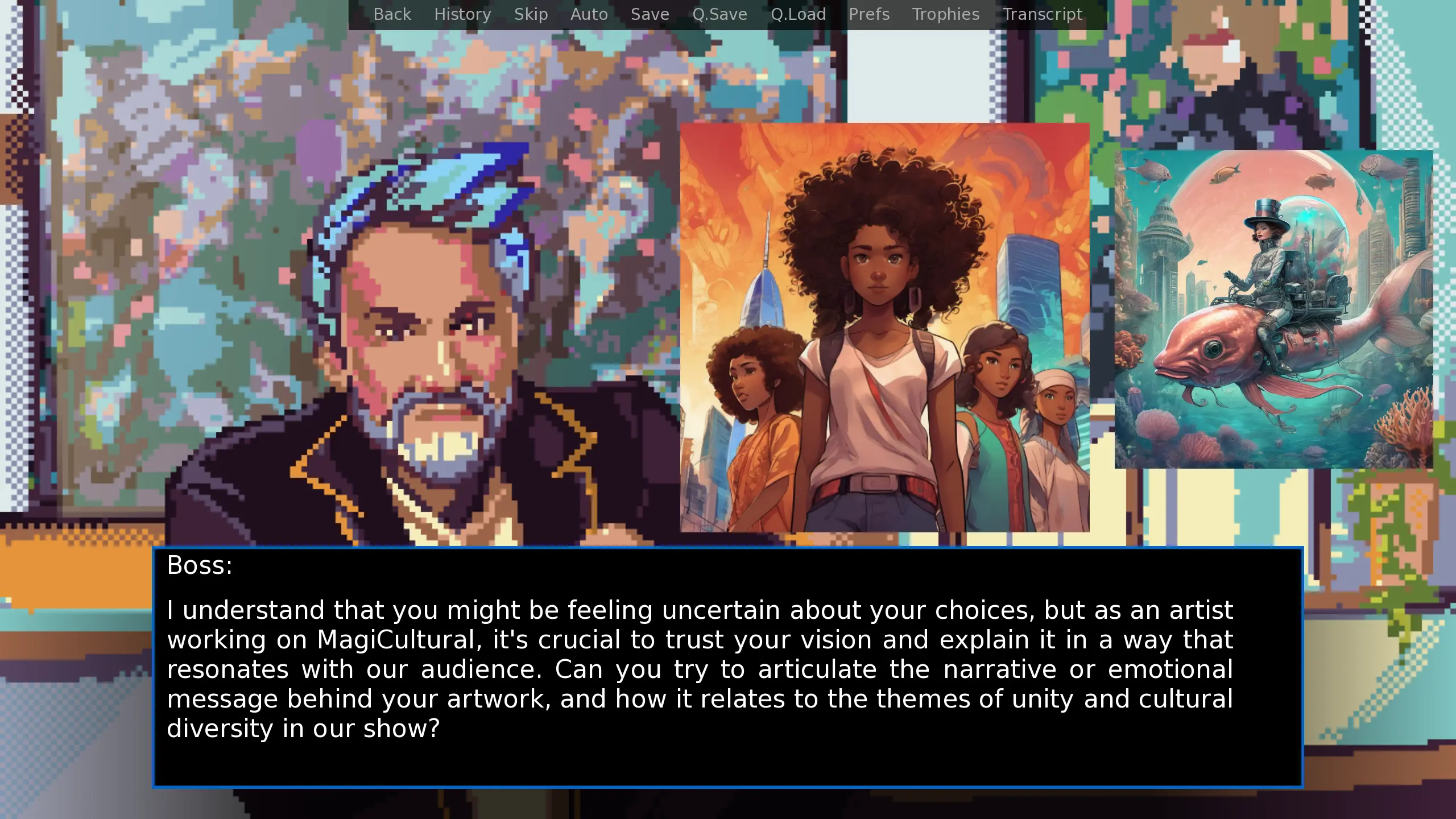

In the game, the totally fictitious game company Grizley is looking for concept designers to work on their new IP. Our hero submitted a portfolio generated by an AI, and got a job interview. However, the hero didn’t really read the series’ working idea, nor the portfolio that the AI submitted. When shown both the series artwork and the portfolio, you have to convince the interviewer that you are indeed competent enough to get the job.

Each playthrough is unique, as the series concept and portfolio images are generated randomly based on an idea given by the LLM. Then, to decide whether you get accepted, the LLM evaluates how convincingly the player explains that the portfolio was actually a conscious artistic choice and a meaningful addition to the series concept.

In other sections, the LLM is used to evaluate the player’s action, similarly to old parser-based games, but in a rather free-form way, which I believe achieves the true vision of these games.

Notes on design

(Illusion or) Immersion in video-games

Video games are all about creating the illusion of a virtual world; a digital Potemkin village. To make it work, the player should follow the prescribed path, lest he bump into invisible walls. The metaphorical walls could be that the NPCs just react as finite-state automata. With LLMs, we can have text interactions that are convincingly human. However, the longer the conversation goes, the more transparent the illusion tends to become.

To get a good experience, we thus focus on ideas that insert fairly short AI interactions within a traditional visual novel framework. This framework ensures that the general narrative is engaging, with natural interactions.

Interactive Fiction Lineage

Interactive Fiction is a genre with a rich history; see for instance 50 Years of Text Games by Aaron A. Reed. While it is a niche genre, it allows for unbridled creativity, and it therefore regularly produces massive successes (see for example Doki Doki Literature Club, Slay the Princess, Milk inside a bag of milk inside a bag of milk, etc.). Interactive Fiction games are often considered “complicated” because the player has to hold all the information in mind (or, God forbid, take written notes). The more “language-based” ones tend to parse actions of the form “VERB NOUN”. There is a layer of “artificial difficulty” in finding the right wording to perform an action. The difficulty is two-sided: the author needs to encode each NOUN, each VERB and its synonyms, and the result of trying each such action (or answer “I don’t understand this action”, which gets old pretty fast). The best way to fill in the vocabulary is to go through the transcripts of playtests, as players will think in their own way. It is a typical case of the Pareto rule, where 80% of the time is spent on 20% of the work.

The other issues which might require a lot of mental overhead, such as inventory, map, and history, can be solved by special commands. In other words, solving the interaction problem is solving the main problem with classical parser-based games.

For examples of such games, you can try our beginner-friendly ones, Feathery Christmas and CC’s Road to Stardom (which is a bit less typical as there aren’t really any inventory based puzzles).

Truly language-based games

Until now, language-based video-games used techniques such as parsers, which only allow very specific inputs to work. That makes them more puzzles than language games. Another approach is to take user input as a series of choices; in that case, it is not really a game but a branching fiction. With LLMs, we can create real single-player language-based video-games, which include lying games as a subset.

Notes on Development

Development was expected to take about a weekend, with a week to finish the project. It grew to one month before we knew it, and more than 180 commits.

Local AI vs Cloud services

Running things locally is nice, but my main teammate works with a toaster, so we had to find a cloud solution. Thankfully there are services that provide a free tier that is more than enough for our needs. In terms of performance, both are fast enough. To avoid the bottleneck with creating the images with SDXL, the jobs are sent at the beginning of the game, and the images are downloaded a bit later, so that there is no wait.
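As a rough illustration of the "send early, download later" idea, here is a minimal sketch using a thread pool. It is not the game's actual code: `generate_image` is a hypothetical stand-in for the slow cloud request, and `ImagePrefetcher` is a name made up for this example.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def generate_image(prompt):
    """Hypothetical stand-in for the slow cloud image request."""
    time.sleep(0.1)  # pretend the service takes a while
    return f"image for {prompt}".encode()


class ImagePrefetcher:
    """Start image jobs early, collect the results when they are needed."""

    def __init__(self, fetch=generate_image, max_workers=4):
        self._fetch = fetch
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._jobs = {}

    def submit(self, name, prompt):
        # Returns immediately; the request runs in a background thread.
        self._jobs[name] = self._pool.submit(self._fetch, prompt)

    def is_ready(self, name):
        return self._jobs[name].done()

    def get(self, name, timeout=None):
        # Blocks only if the job has not finished yet.
        return self._jobs[name].result(timeout=timeout)


if __name__ == "__main__":
    prefetcher = ImagePrefetcher()
    prefetcher.submit("portfolio", "a moody robot knight")  # at game start
    # ... the intro dialogue plays here while the image is generated ...
    print(len(prefetcher.get("portfolio", timeout=5)), "bytes")
```

If the intro is long enough, `get` returns right away and the player never sees a loading pause.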

LLM outputs are painfully brittle

This is, without a doubt, the biggest problem. As a typical example, consider a case where the player is in the lobby and is expected to go ask the secretary where the interview is; call that option (3). There are a few other things that the player character can do, including (1) being disruptive, (2) leaving the building, or just (4) doing about nothing. The LLM tends to really want to move the story forward, so when inputting “Rehearse my notes”, a typical LLM answer would be “after checking my notes carefully, I move to the desk to talk to the secretary”, which selects option (3). Of course, “checking notes” should have been option (4) in this specific context. This is generally fixed by giving strict instructions about option (3): to get it, the player must take explicit action.

In terms of the Pareto rule, fine-tuning the prompts is the bad half.

Note that in all cases the LLM is asked to respond with JSON, which fails quite often. It is crucial to implement an automatic retry mechanism to avoid getting bombarded with error messages.
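A retry mechanism of this kind can be quite small. The sketch below is only one way to do it: `ask` is a hypothetical callable that sends the prompt to the LLM and returns its raw text, and the `required_keys` check is an assumption about what a valid answer looks like.

```python
import json


def ask_json(ask, prompt, required_keys=(), max_attempts=3):
    """Call ask(prompt) until the reply parses as a JSON object.

    `ask` is a hypothetical function returning the raw LLM text.
    """
    last_error = None
    for _ in range(max_attempts):
        raw = ask(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = exc  # malformed JSON: try again
            continue
        if not isinstance(data, dict):
            last_error = ValueError("expected a JSON object")
            continue
        missing = [key for key in required_keys if key not in data]
        if missing:
            last_error = ValueError(f"missing keys: {missing}")
            continue
        return data
    # Only surface an error once every attempt has failed.
    raise RuntimeError(
        f"no valid JSON after {max_attempts} attempts"
    ) from last_error


if __name__ == "__main__":
    replies = iter(["Sure! Here you go:", '{"option": 4}'])
    print(ask_json(lambda prompt: next(replies), "Classify the action",
                   required_keys=("option",)))
```

The player only sees an error if all attempts fail, which keeps the occasional malformed reply invisible.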

Another problem, which can be fixed by keeping explicit checkpoints, comes from rollbacks and rollforwards. Suppose the AI is tasked with estimating how confident it should be that the hero made intentional choices, starting at 0.5. In chat mode, each new evaluation takes the previous confidence into account. Suppose our interaction brings us from 0.5 to 0.6. Then, rolling back and forward again will bring us to 0.7, as the LLM treats the replayed interaction as further improving the confidence, and does not care that the same question/answer was repeated. To avoid this, the whole context should be saved and given in its entirety to the LLM each time. This is tricky, as it requires care, and it makes for very bloated prompts.
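To make the "give the whole context each time" idea concrete, here is a minimal sketch. The prompt wording, the role mapping (interviewer as `assistant`, player as `user`) and the `ask` callable are all assumptions made for the example, not the game's real code.

```python
SYSTEM_PROMPT = (
    "You are the interviewer. Given the full conversation, reply with a "
    "single number between 0 and 1: your confidence that the candidate's "
    "portfolio choices were intentional."
)  # hypothetical wording


def build_messages(exchanges):
    """Rebuild the complete prompt from the saved (question, answer) pairs."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for question, answer in exchanges:
        messages.append({"role": "assistant", "content": question})
        messages.append({"role": "user", "content": answer})
    return messages


def evaluate(ask, exchanges):
    """Score a transcript from scratch; no hidden chat state is reused."""
    return float(ask(build_messages(exchanges)))


if __name__ == "__main__":
    def fake_ask(messages):
        # Toy scorer standing in for the LLM: depends only on its input.
        answers = sum(1 for m in messages if m["role"] == "user")
        return str(min(1.0, 0.5 + 0.1 * answers))

    checkpoint = (("Why the neon colours?", "They echo the series logo."),)
    print(evaluate(fake_ask, checkpoint))
    print(evaluate(fake_ask, checkpoint))  # replaying does not add anything
```

Because the score depends only on the saved exchanges, replaying the same exchange after a rollback cannot inflate the confidence through leftover chat history (the LLM's own randomness aside). The saved tuple of exchanges is what would need to live in the engine's checkpointed state.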

Tuning difficulty

Before working on the game, I tried some interactive sessions with Ollama. This let me see that the concept was working as imagined. While testing the game, everything felt too easy, as I had found a sure way to make everything work. If anything, I had trouble getting the okay ending: I knew how to convince the AD and how to be rude, but giving mildly acceptable answers was a challenge. However, the first playtests from friends were not like that. They were confused and got the worst ending at least twice. I added a transcript feature to gather more insight.

The Ren’Py game engine

After some experiments, we decided on Ren’Py as the game engine. It is Free and Open Source, written in Python, and beginner-friendly. It has a lot of nice features out of the box: managed variable state, which gives rollback and rollforward of the game state for free, exports for major platforms, etc.

External libraries are not really supported

However, I would qualify Ren’Py as “Python-based” rather than a Python game engine.

There are a number of ways in which Ren’Py deviates from standard Python.

Most notably, the package import rules work quite differently, and as such it does not support the pydantic package, nor any package depending on pydantic.

This includes the OpenAI python library.

There is a hack that allows for such packages to work with Ren’Py.

Create a virtual environment based on the same Python version as Ren’Py (3.9 as of version 8).

Next, install all required packages there.

Finally, in the Python initialization, add the virtualenv path to sys.path.
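The last of these three steps can be sketched in plain Python as follows. The helper name and the virtualenv location are assumptions made for illustration (the actual `site-packages` path depends on the platform), and in a Ren’Py project the call would sit inside an `init python:` block.

```python
import sys
from pathlib import Path


def add_site_packages(venv_site_packages):
    """Make packages installed in a virtualenv importable.

    `venv_site_packages` is the virtualenv's site-packages directory;
    the location used below is only an example.
    """
    path = str(Path(venv_site_packages).resolve())
    if path not in sys.path:  # avoid duplicates on reload
        sys.path.append(path)
    return path


if __name__ == "__main__":
    # Hypothetical layout: a "venv" folder next to the game files.
    print(add_site_packages("venv/lib/python3.9/site-packages"))
```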

The hack also works for the executable build, but not for the web build.

However, the web build has limitations (like not having a network stack) that make it irrelevant for our needs.

Ultimately, the libraries could be replaced by fewer than 250 lines of code, so I removed all dependencies to eliminate this abomination from the code.

It’s sad to lose jsonschema and replace it with a basic function, but this is the better choice in this context.
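For a sense of scale, a basic replacement for schema validation can be as small as the sketch below. This is an illustration, not the function used in the game: the `validate` name, the flat key-to-type spec and the example keys are all assumptions.

```python
def validate(data, spec):
    """Check that `data` is a dict with the keys and types listed in `spec`.

    `spec` maps each required key to a type (or tuple of types).
    Returns a list of error messages; an empty list means valid.
    """
    if not isinstance(data, dict):
        return ["expected a JSON object"]
    errors = []
    for key, expected in spec.items():
        if key not in data:
            errors.append(f"missing key: {key!r}")
            continue
        value = data[key]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) and expected is not bool:
            errors.append(f"wrong type for {key!r}: bool")
        elif not isinstance(value, expected):
            errors.append(f"wrong type for {key!r}: {type(value).__name__}")
    return errors


# Hypothetical shape of an LLM answer: an option number and some narration.
ACTION_SPEC = {"option": int, "narration": str}

if __name__ == "__main__":
    print(validate({"option": 4, "narration": "You wait."}, ACTION_SPEC))
    print(validate({"option": "4"}, ACTION_SPEC))
```

It covers far less than jsonschema (no nesting, ranges or enums), which is the trade-off for having no dependency.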

Displaying text is a surprising pain point

Ren’Py is a visual novel engine, so it should be good at displaying text. However, by design it expects text to be given in short lines, and to have almost no text input. Because our game required ‘arbitrarily long’ text as both input and output, we had to implement a custom text display to work around this. Ren’Py uses a styling system very similar to CSS, which is powerful but has some of the same issues when working with the default template.

In the same space there is also the Twine engine, which I have been hesitant to test. There are some libraries to convert the states from Twine to Ren’Py, so I expect the converse to be feasible.

Platform Distribution

Because of the need for requests and saving images, the web build is not an option. (I suppose it might be possible to hack something in js… but it does not feel like a good idea).

Our first playtester (actually the game’s musician) is a Mac user. When provided with the Mac build, he was unable to get the program to save files (or even create the session folder in its own directory). As I don’t run non-free OSes, I decided to give up on a Mac build that I wouldn’t be able to support. This motivated us to put the game on Steam instead, to have an easy path to play.

A compendium of ideas

LLMs are a nice tool that opens up a smorgasbord of new possibilities with a very low barrier to entry.

Game ideas

My own brainstorming session, before settling on the job interview, was this:

- PR God: you are working for a huge company and need to defend them for their terrible blunder, without getting fired and while avoiding responsibility as much as possible. Sometimes the company gets hacked or leaks private medical info. It’s your job to make the ~~pleb~~ general public understand that it’s not a big deal.

- Way of success: you want to succeed in a fashion magazine. You need to provide work, choosing article topics and photographs to display, but you can also go full dating sim with your superiors to advance faster in the hierarchy.

- The Right Hand: You are the assistant of a senile president, and you want to take over the country. Worm your way into power, abuse his trust and sabotage other colleagues (the honest ones and the power hungry liars).

- Consciounaut: you can discuss with the outside world while exploring the unconscious mind of someone that can only communicate with symbolic images. You have to uncover the truth that hinders them.

- Art Gallerist: you get pictures and descriptions and must accept artists that are “authentic”.

- Faker Artist: you must peddle fake art to rich people for big money.

- Nigerian Prince: you must scam people into sending you a lot of money.

- Time Travel Imposter: you need to find fellow time travellers. You have to trick them into mentioning information they should not know.

- Social 11: social engineer your way into the bank.

- Spirit Talker: ghosts can talk to you in subtle ways. Can you decode their language so that they can RIP?

New gameplay features

There are a number of new technical possibilities that directly open up new gameplay features:

- NPC memory: interactions are evaluated to be stored in memory

- relationship parameters: each interaction might be evaluated to update the relationship parameters with the player (friendliness, trust, etc.)

- rumor system: discussing something (or someone!) with somebody might create a rumor about it. This could affect the relationship parameters, as well as major decisions (you won’t get the promotion if something horrible is circulating…).

- group conversations: interrupting or supporting a character would be judged by all other NPCs

With something like Way of success, where dating and office politics comingle, all these elements would be put to use effectively.
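As one possible shape for the relationship parameters listed above, here is a small sketch. Everything in it is hypothetical: the parameter names come from the list, but the 0 to 1 range, the delta format (as it might be returned by an LLM evaluation) and the class itself are assumptions.

```python
from dataclasses import dataclass

PARAMETERS = ("friendliness", "trust")  # hypothetical parameter set


def clamp(value, low=0.0, high=1.0):
    return max(low, min(high, value))


@dataclass
class Relationship:
    """How one NPC feels about the player, each value kept in [0, 1]."""
    friendliness: float = 0.5
    trust: float = 0.5

    def apply(self, deltas):
        """Apply evaluated changes, e.g. {"trust": -0.2}."""
        for name, delta in deltas.items():
            if name not in PARAMETERS:
                continue  # ignore anything the evaluation made up
            setattr(self, name, clamp(getattr(self, name) + delta))


if __name__ == "__main__":
    boss = Relationship()
    boss.apply({"trust": -0.2, "friendliness": 0.1})
    print(boss)
```

Clamping and ignoring unknown keys keep a single odd evaluation from pushing the state out of range.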

A bright future

Let’s see other people’s ideas!